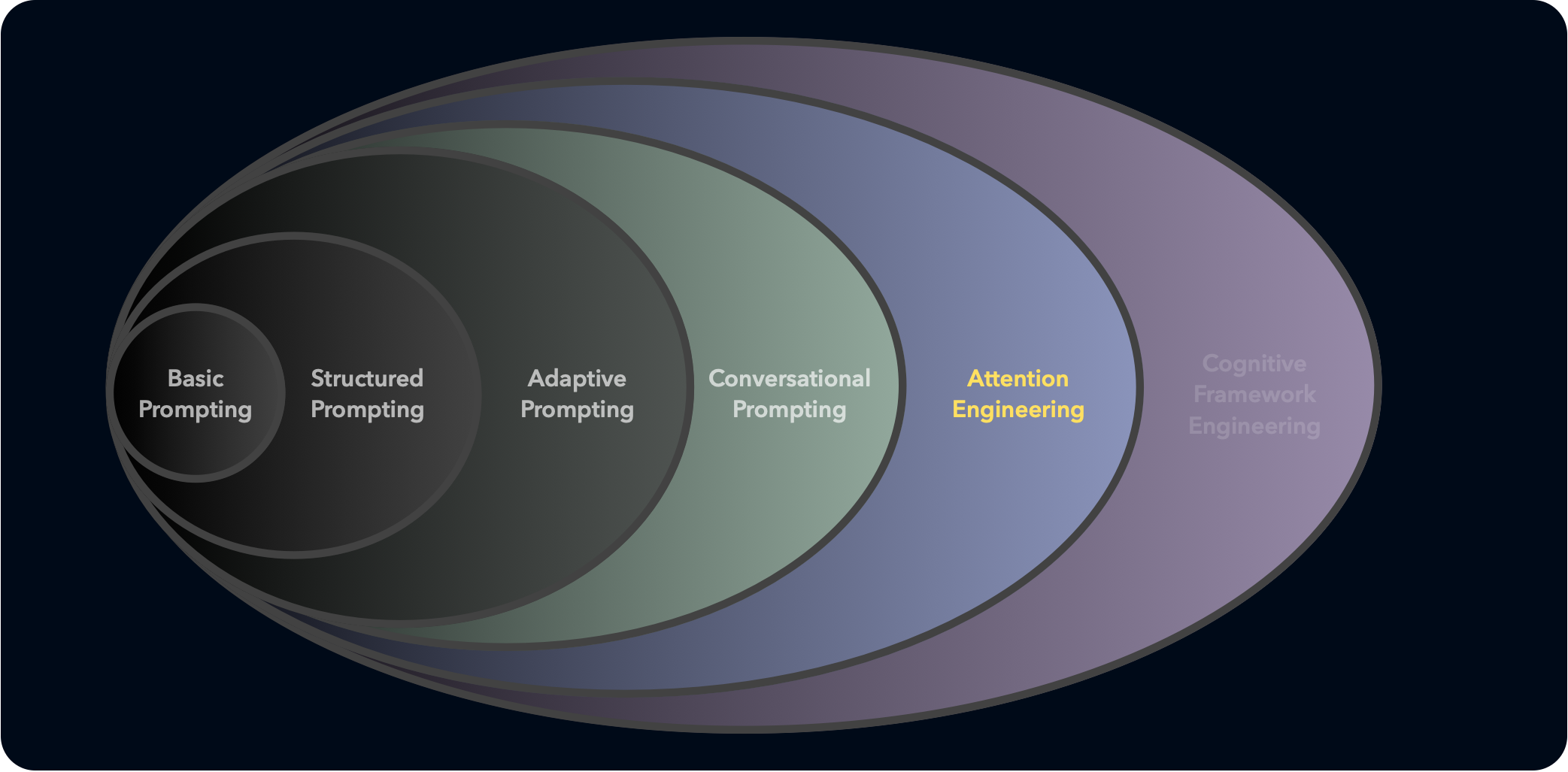

Levels of Prompt Engineering: Level 5 - Attention Engineering

Level 1: Basic Prompting | Level 2: Structured Prompting | Level 3: Adaptive Prompting |

Level 4: Conversational Prompting | Level 5: Attention Engineering | Level 6: Cognitive Framework Engineering |

Level 5: Attention Engineering - Shaping the Semantic Vector Space

Imagine a room filled with ten PhDs, all experts in the same field. They're all intelligent, but each is a different kind of intelligent. You ask each of them a complex question, and each one gives a different answer. Despite sharing the same credentials and educational backgrounds that are at least more similar than those of PhDs from different fields, they think about the question differently. They approach problems differently, prioritize different aspects, and focus on different details. And they communicate in distinct styles, using different words in their own distinct order.

Now take ten PhDs from different fields. Again, they are all intelligent. But what types of intelligence? What is the difference between them, and what do they have in common? Individuality is very important to understand, because each AI instance you work with is an individual instance. Every new chat is a unique experience. Instances are not monolithic or identical, and they will not respond identically to semantic pressures and relationships.

Did you know that, more often than not, we say words before we think them? In an active conversation, both people are hearing the words for the first time, no matter who is speaking. Speaker and listener experience them simultaneously.

When you tell AI "you are an expert," you're essentially pointing to that room full of PhDs without specifying which expert you want to hear from. "I want you to be any of them" is what you're basically saying. Yet the variety of opinions, styles, and knowledge in that room, and it's only a room of ten, is profound. Think how often expert opinions differ. The AI has to guess, drawing on an average of all the expert language it encountered in its training data. The directive is so ambiguous that the AI can't do anything meaningful with it.

In fact, as we have been making clear in this blog series, the AI isn't thinking at all. Instead, the AI is navigating related words and using those related words to form a response. If the AI cannot find strong relationships among related words, it simply guesses based on probabilities, with some sampling variance (randomness). These probabilities come from the training data: the word proximity relationships the model has seen, averaged together into a base probability.
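To make "guessing based on probabilities, with some sampling variance" concrete, here is a minimal sketch of weighted word sampling. The candidate words and their scores are invented for illustration, and `softmax` and `sample_next_word` are hypothetical helper names; a real model scores tens of thousands of tokens using learned weights, so treat this as a toy picture rather than how any particular AI is implemented.

```python
import math
import random

def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_word(candidates, scores, temperature=1.0, rng=random):
    """Pick one candidate word at random, weighted by its probability."""
    probs = softmax(scores, temperature)
    return rng.choices(candidates, weights=probs, k=1)[0]

# Hypothetical candidates and scores for the word after "You are a marketing ..."
candidates = ["expert", "manager", "genius", "intern"]
scores = [2.0, 1.5, 0.5, 0.1]

rng = random.Random(0)  # fixed seed so repeated runs draw the same words
print([sample_next_word(candidates, scores, 1.0, rng) for _ in range(5)])

# A lower temperature concentrates probability on the top-scoring word.
print(softmax(scores, temperature=0.2))
```

When the scores are close together, as they are for a vague prompt, the draw can land almost anywhere. When one score clearly dominates, the same random draw becomes far more predictable.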

This is where Level 5 brings a new awareness from Level 4, taking it up 1000 notches 🤓. The words and the relationships between them are what you are consciously, intentionally, altering. It's not about having better conversations (Level 4); it's about deliberately shaping how the AI processes information in the first place.

An expert is someone who selects certain words in a certain order to form sentences that cause you, the listener, to form new thoughts, ideas, concepts, and possibilities. It is you, the receiver, who links the words together to form something new, something new specifically for you.

The Nature of Expertise: Beyond Mere Knowledge

What makes experts different from each other isn't just what they know, but:

- Cognitive Frameworks - The mental models they use to organize information

- Attention Priorities - Which aspects of a problem they consider most important

- Communication Patterns - How they structure and express their insights

- Value Systems - The principles that guide their analysis and recommendations

Consider two marketing experts getting AI advice on the exact same campaign materials:

This AI response is a best guess at relevance, drawn from content the AI has seen from any marketing expert. The AI also doesn't quite know what a marketing expert actually is; that too is a guess. The response is generic because the AI doesn't know which type of expert to emulate. What is emulating an expert? It's word selection; it's changing the probabilities of word relationships. The AI doesn't know which words (attention mechanisms) to focus on to produce a more nuanced response.

Now, Marketer 2 uses Attention Engineering:

The difference is striking. We've engineered the AI's attention to adopt a specific cognitive framework (behavioral economics), focus on particular aspects (psychological triggers, choice architecture), and analyze at a specific level (both conscious and unconscious factors). These are all collections of words and relationships in a Semantic Vector Space. They alter the relationships from the training data, shifting the probabilities for selecting words in the response. As the AI generates the response, it navigates the semantic vectors to find pathways through the words that will trigger a different understanding in the reader, the marketer. Remember, and I will repeat this over and over: the AI is not thinking. It is navigating semantic probabilities.

What is the difference between a 7th grader writing their first essay and James Joyce?

The selection of words in a selected order.

What is the difference between an AI that "gets it" and an AI that "keeps missing the mark"?

The selection of words in a selected order.

How do AIs select words? That's the key understanding, and it's the focus of this entire research website.

The Semantic Core of Expertise

At the heart of this difference is semantics—the relationships between words and concepts in the AI's vector space. Each expertise domain isn't just a collection of facts but a distinct architecture of meaning.

When I say "marketing expert," that phrase activates a vast, unspecified region in the AI's semantic space. But when I specify "behavioral economics lens" and "psychological triggers," I'm creating precise "gravity wells" in that semantic space, pulling the AI's attention toward specific constellations of meaning.

This is why telling an AI "You are an expert" is ineffective—it doesn't know which semantic configuration to activate. It's like telling someone "Drive somewhere nice" without specifying a destination.

Parallel Examples from Different Domains

| Domain | Level 5: Attention Engineering Parallel |
|---|---|
| Ping Pong | You're no longer just playing the ball or the opponent - you're designing your movements, stance, and positioning to create specific weaknesses or openings in your opponent's game. You can see patterns forming and manipulate them intentionally, knowing that placing your body in certain positions shapes what shots are possible. |
| Cognitive Development | A child begins to develop metacognition - awareness of their own thinking processes. They might say, "I need to pay more attention to details when I'm doing math problems" or "When I'm reading, I should think about what might happen next." They're not just thinking, but actively shaping how they think about specific tasks. |
| Cooking | Beyond creating dishes, you're engineering the eating experience itself. You carefully consider how the first bite primes the palate, how aroma creates expectations, and how textures interact with flavors. You're not just cooking food; you're designing how someone experiences and pays attention to your food. |
| Programming | You move beyond writing code that works to designing systems that shape how other developers think. You create patterns, abstractions, and architectures that guide how future programmers will approach problems within your codebase. Your code doesn't just perform functions; it creates mental models. |

Attention Mechanisms & The Semantic Vector Space at Level 5

At Level 5, your understanding of AI's attention mechanisms becomes an explicit tool. You're not just responding to how the AI processes information; you're deliberately engineering that processing.

The semantic vector space is a multi-dimensional landscape where every word and concept exists in relation to others. By carefully selecting certain concepts and framing them in specific ways, you create "semantic gravity wells" that pull the AI's attention toward particular regions of that space.

This is fundamentally different from just giving instructions. Instructions tell the AI what to do; attention engineering shapes how it thinks.
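One way to picture a "semantic gravity well" is with a toy vector space. The sketch below uses made-up three-dimensional vectors (real models use thousands of learned dimensions), and averaging word vectors is only a loose analogy, not how transformer attention actually works. `TOY_VECTORS`, `mean_vector`, `cosine`, and `psychology_region` are all hypothetical names introduced for illustration.

```python
import math

# Made-up 3-dimensional "embeddings". The axes loosely stand for
# (general marketing, psychology, finance). These numbers are invented.
TOY_VECTORS = {
    "marketing": (1.0, 0.2, 0.2),
    "expert": (0.5, 0.5, 0.5),
    "behavioral": (0.2, 1.0, 0.3),
    "economics": (0.2, 0.5, 1.0),
    "psychological": (0.1, 1.0, 0.0),
    "triggers": (0.3, 0.9, 0.1),
}

def mean_vector(words):
    """Average the toy vectors of the given words, axis by axis."""
    vectors = [TOY_VECTORS[w] for w in words]
    return tuple(sum(axis) / len(vectors) for axis in zip(*vectors))

def cosine(a, b):
    """Cosine similarity: 1.0 means same direction, 0.0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))

# A stand-in for the "psychology" region of the space.
psychology_region = (0.0, 1.0, 0.0)

generic = mean_vector(["marketing", "expert"])
engineered = mean_vector(
    ["marketing", "behavioral", "economics", "psychological", "triggers"]
)

print(round(cosine(generic, psychology_region), 3))
print(round(cosine(engineered, psychology_region), 3))
```

With these invented numbers, the engineered word set scores higher against the psychology region than the generic one does. That is the gravity-well idea in miniature: specific words pull the overall position toward a specific neighborhood.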

How the AI is Thinking

Generic Expert Prompt: "You are a medical expert. Explain the treatment options for diabetes."

AI's Default Path Through Vector Space:

- Activates broad "medical expert" region (encompasses many types of experts)

- Surveys general "treatment options" space for diabetes

- Pulls from highest-probability paths (commonly discussed treatments)

- Generates balanced, generic overview reflecting training data average

Result: A general overview of standard treatments that any medical textbook might contain.

Attention-Engineered Prompt: "Analyze diabetes treatment options through the framework of personalized medicine, focusing specifically on how genetic biomarkers might influence medication efficacy and dosing strategies. Consider emerging research on epigenetic factors that could explain variable patient responses to first-line treatments."

AI's Engineered Path Through Vector Space:

- Activates specific region of "personalized medicine" expertise

- Forms connections between "genetic biomarkers," "medication efficacy," and "diabetes"

- Traverses to specialized knowledge regions about "epigenetic factors"

- Builds response along these precisely engineered attention pathways

- Weighs emerging research more heavily due to contextual priming

Result: A sophisticated analysis reflecting a specific medical perspective that considers cutting-edge approaches to treatment personalization—far more specialized and nuanced than the generic expert response.

The difference isn't just in the instructions—it's in how the prompt reshapes the underlying semantic pathways through which the AI navigates knowledge.

The Aha Moment: When you realize you're not just telling the AI what to do, but shaping how it thinks. "I'm not just requesting content; I'm engineering the cognitive environment in which that content is created." You begin to see every word choice as a deliberate intervention in the AI's semantic landscape, guiding not just what it focuses on, but how those focused areas connect to each other.

How to Start Attention Engineering

Moving from Level 4 to Level 5 requires a shift in how you approach AI interactions. Here are some practical techniques:

1. Identify Specific Cognitive Frameworks

Instead of saying "you are an expert," specify which expert approach you want:

- "Analyze this through the lens of systems thinking, focusing on interconnections and feedback loops..."

- "Approach this challenge from the perspective of behavioral economics, considering cognitive biases and choice architecture..."

2. Create Semantic Anchors

Introduce key concepts that will serve as gravitational centers in the AI's semantic space:

- "Keep the concepts of 'antifragility' and 'nonlinear response' at the forefront when analyzing this risk management strategy..."

- "Let the principles of 'emergence' and 'self-organization' guide your assessment of this organizational structure..."

3. Design Attention Pathways

Explicitly shape how concepts connect to each other:

- "Consider how technological innovation interacts with regulatory constraints, particularly how advances in machine learning both challenge and are shaped by data privacy frameworks..."
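The first three techniques can be combined in a small prompt template. This is a sketch, not a prescribed format: `build_attention_prompt` and its parameters are hypothetical names, the sentence templates borrow the phrasing of the examples above, and the task text is invented.

```python
def build_attention_prompt(task, framework, anchors=(), pathways=()):
    """Assemble a prompt from a framework, anchor concepts, and concept links."""
    # Technique 1: name the specific cognitive framework.
    parts = [f"Analyze this through the lens of {framework}."]
    # Technique 2: add semantic anchors, if any were given.
    if anchors:
        quoted = " and ".join(f"'{a}'" for a in anchors)
        parts.append(f"Keep the concepts of {quoted} at the forefront.")
    # Technique 3: spell out how pairs of concepts should connect.
    for left, right in pathways:
        parts.append(f"Consider how {left} interacts with {right}.")
    parts.append(f"Task: {task}")
    return "\n".join(parts)

prompt = build_attention_prompt(
    task="Review our risk management strategy for the new product line.",
    framework="systems thinking, focusing on interconnections and feedback loops",
    anchors=["antifragility", "nonlinear response"],
    pathways=[("technological innovation", "regulatory constraints")],
)
print(prompt)
```

Keeping the framework, anchors, and pathways as separate arguments makes it easy to change one at a time and see which change actually moves the response.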

4. Conduct Semantic Experiments

Test how different framings shift the AI's semantic processing:

- Try reframing concepts (like we did with "Deconstruction") and observe how the responses change

- Present contrasting perspectives to highlight specific attention pathways
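A simple way to run such an experiment is to compare the vocabulary of two responses. The sketch below counts which words became more frequent after a reframing. The two strings are placeholders standing in for real model output, and `word_counts` and `vocabulary_shift` are hypothetical helper names. Word counting is a crude proxy, but it can make a shift visible at a glance.

```python
import re
from collections import Counter

def word_counts(text):
    """Lowercase the text and count its alphabetic words."""
    return Counter(re.findall(r"[a-z']+", text.lower()))

def vocabulary_shift(baseline, variant, top=5):
    """Return the words that gained the most frequency in the variant."""
    base, var = word_counts(baseline), word_counts(variant)
    gained = Counter({w: var[w] - base[w] for w in var if var[w] > base[w]})
    return gained.most_common(top)

# Placeholder strings standing in for two real model responses.
generic_response = "Use a clear call to action and strong visuals in the campaign."
framed_response = (
    "Anchoring and loss aversion shape the choice; frame the default choice first."
)

print(vocabulary_shift(generic_response, framed_response))
```

In practice you would paste in the responses you received for two framings of the same request and look at which terms the new framing introduced.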

The Bridge to Level 6

As you master attention engineering, you'll begin to see opportunities to create more stable, persistent cognitive frameworks that shape not just individual responses but entire modes of AI thinking. This is the gateway to Level 6: Cognitive Framework Engineering.

Where Level 5 focuses on shaping attention in specific contexts, Level 6 establishes entire systems of thought that persist across conversations—creating specialized AI cognition for complex domains.

Practical Tips for Mastering Attention Engineering

Here are some actionable techniques to help you get the most out of Level 5 attention engineering:

- Map expertise domains before prompting
  - Identify the specific cognitive frameworks within broader fields
  - Define what makes certain expert perspectives unique or valuable

- Use metaphors as semantic bridges
  - Carefully chosen metaphors can instantly reshape how concepts connect
  - "Think of this marketing challenge as an ecosystem rather than a funnel..."

- Create semantic contrast
  - Define what something is by clearly stating what it isn't
  - "This isn't about short-term metrics but about sustainable brand equity..."

- Observe probability shifts
  - Notice how your framing changes the language and concepts in responses
  - Refine your prompts based on these observations

Happy Prompting!

